The FuzzyLog: a partially ordered shared log Lockerman et al., OSDI’18

If you want to build a distributed system then having a distributed shared log as an abstraction to build upon — one that gives you an agreed upon total order for all events — is such a big help that it’s practically cheating! (See the “Can’t we all just agree” mini-series of posts for some of the background on consensus).

Services built over a shared log are simple, compact layers that map a high-level API to append/read operations on the shared log, which acts as the source of strong consistency, durability, failure atomicity, and transactional isolation. For example, a shared log version of ZooKeeper uses 1K lines of code, an order of magnitude lower than the original system.

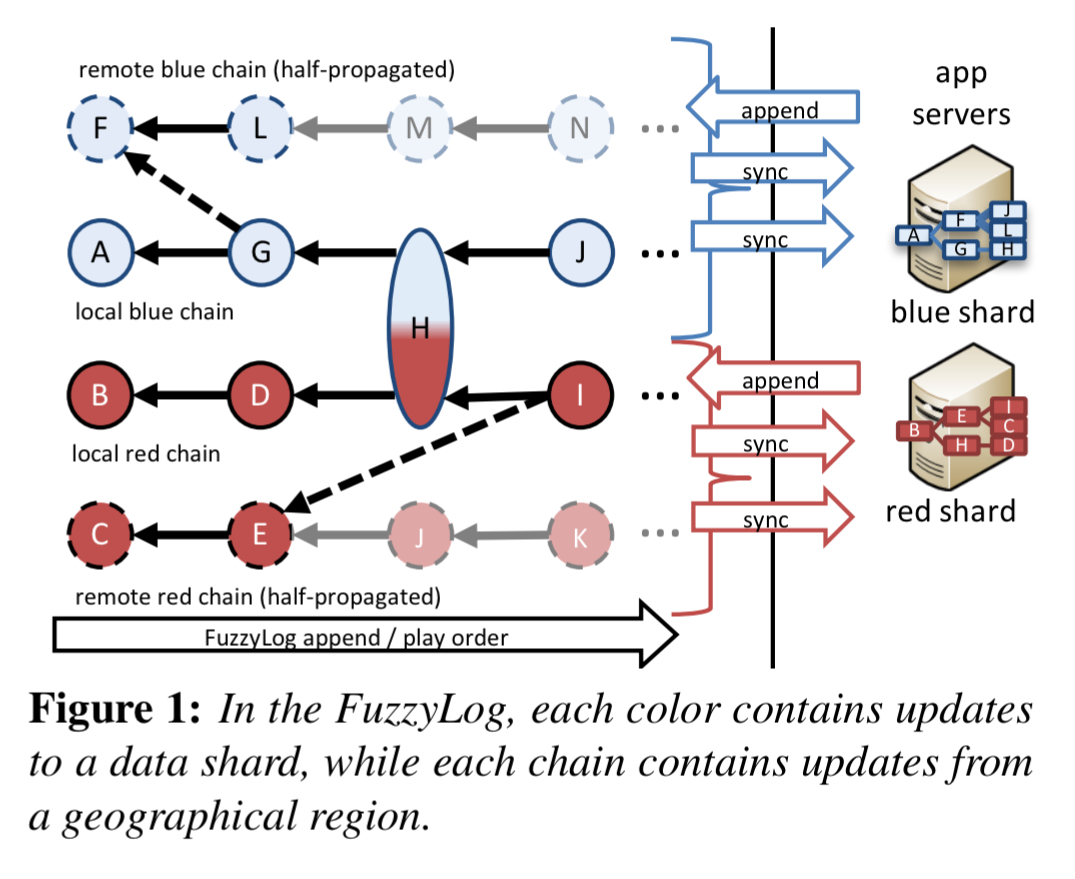

There’s a catch, of course. System-wide total orders are expensive to maintain, and sometimes impossible (e.g. in the event of a network partition). But perhaps we don’t always need a total ordering: often, for example, causal consistency is strong enough. FuzzyLog aims to provide the simplicity of a shared log without imposing a total order: it provides partial ordering instead. It’s designed for a world of sharded, geo-replicated systems. The log is actually a directed acyclic graph made up of interlinked chains. A chain contains updates originating in a single geographic region. Nodes within the chains are coloured, with each colour representing updates to a single application level data shard.

(Note: when this paper talks about nodes, the authors mean nodes in the Log DAG, not system nodes (servers)).

Our evaluation shows that [applications built on top of FuzzyLog] are compact, fast, and flexible: they retain the simplicity (100s of lines of code) and strong semantics (durability and failure atomicity) of a shared log design while exploiting the partial order of the FuzzyLog for linear scalability, flexible consistency guarantees (e.g., causal+ consistency), and network partition tolerance. On a 6-node Dapple deployment [Dapple is a FuzzyLog implementation], our FuzzyLog-based ZooKeeper supports 3M/sec single-key writes, and 150K/sec atomic cross-shard renames.

The FuzzyLog API

Let’s take a closer look at the abstraction provided by FuzzyLog through its API. FuzzyLog is designed to support partitioning state into logical data shards, with concurrent processing of updates against different shards. It also supports geo-replication with concurrent updates across regions (even to the same logical partition).

- Each node in the DAG is tagged with one or more colours, which divide an application’s state into logical shards. Nodes tagged with a colour correspond to updates against the corresponding logical shard.

- Each colour is a set of totally ordered chains, one per region, with cross-edges between them that indicate causality. Every region has a full (but potentially stale) copy of each colour.
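
To make the shape of a single colour concrete, here is a minimal Python sketch of one colour as per-region chains plus cross-edges. The names (`ColourDag`, `Node`, `happens_before`) are mine, not the paper’s API, and the whole thing lives in one process purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    region: str
    index: int                      # position in its region's chain
    payload: str
    cross_edges: list = field(default_factory=list)  # (region, index) pairs


class ColourDag:
    """One colour: a totally ordered chain per region, linked by cross-edges."""

    def __init__(self, regions):
        self.chains = {r: [] for r in regions}

    def append(self, region, payload, seen=()):
        # `seen` lists nodes from other regions the appender had already
        # observed; they become cross-edges recording causality.
        chain = self.chains[region]
        node = Node(region, len(chain), payload, list(seen))
        chain.append(node)
        return (region, node.index)

    def happens_before(self, a, b):
        # Walk backwards from b along chain order and cross-edges.
        stack, visited = [b], set()
        while stack:
            region, index = stack.pop()
            if (region, index) in visited:
                continue
            visited.add((region, index))
            preds = list(self.chains[region][index].cross_edges)
            if index > 0:
                preds.append((region, index - 1))
            for p in preds:
                if p == a:
                    return True
                stack.append(p)
        return False
```

Two appends in different regions that never observed each other are unordered in this model, which is the "partial" in partial order.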

Clients interact with their own local region via the FuzzyLog API:

Operations to a single color across regions are causally consistent. In other words, two append operations to the same color issued by clients in different regions are only ordered if the node introduced by one of them has already been seen by the client issuing the other one…. Operations within a single region are serializable. All append and sync operations issued by clients within a region execute in a manner consistent with some serial execution. This serialization order is linearizable if the operations are to a single color within the region (i.e., on a single chain).

A client uses the sync call to synchronise its state. sync takes a snapshot of the set of nodes currently present at the local region’s copy of a color, and ‘plays’ all new nodes since the last sync invocation. (I.e., sync is moving clients from watermark to watermark in the totally ordered chain for the given colour in the given region).
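
The watermark-to-watermark behaviour of sync can be sketched in a few lines of Python. This is a toy, single-region, single-process model under my own assumptions: `ToyRegionLog`, `ToyClient` and the callback-style `sync` are hypothetical names, not the FuzzyLog client library.

```python
class ToyRegionLog:
    """One region's copy of the log: a list of payloads per colour."""

    def __init__(self):
        self.chains = {}

    def append(self, colour, payload):
        self.chains.setdefault(colour, []).append(payload)


class ToyClient:
    def __init__(self, log):
        self.log = log
        self.watermark = {}  # colour -> number of nodes already played

    def sync(self, colour, callback):
        chain = self.log.chains.get(colour, [])
        snapshot = len(chain)               # snapshot taken up front
        start = self.watermark.get(colour, 0)
        for payload in chain[start:snapshot]:
            callback(payload)               # 'play' each new node in order
        self.watermark[colour] = snapshot   # advance to the new watermark
        return snapshot - start             # how many nodes were played
```

Nodes appended after the snapshot is taken are left for the next sync call, so each call plays a well-defined prefix of the chain.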

Here’s an example of sync at work:

Building applications on top of FuzzyLog

Given the FuzzyLog abstraction, the authors give highlights of building a series of different systems on top of it. We are left to infer that this process is simple based on the very small number of lines of code required.

First up is a LogMap, which uses a single colour and a single region. Each server has a local in-memory copy of the map and continuously executes sync to keep its local view up to date. LogMap effectively runs over a single totally ordered shared log. It is implemented in just 193 lines of code, but its reliance on total order comes at the cost of scalability, performance, and availability.
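
As a rough illustration of why such a map is so small, here is a sketch of the idea in Python. It is not the authors’ LogMap: a plain list stands in for the shared log, the class and method names are invented, and the server syncs lazily on reads rather than continuously.

```python
class ToyLogMap:
    """One server's view of a map stored in a single totally ordered log."""

    def __init__(self, shared_log):
        self.log = shared_log   # a plain list standing in for the shared log
        self.played = 0         # how many log entries this server has applied
        self.view = {}          # local in-memory copy of the map

    def put(self, key, value):
        self.log.append(("put", key, value))

    def delete(self, key):
        self.log.append(("delete", key, None))

    def sync(self):
        snapshot = len(self.log)
        for op, key, value in self.log[self.played:snapshot]:
            if op == "put":
                self.view[key] = value
            else:
                self.view.pop(key, None)
        self.played = snapshot

    def get(self, key):
        self.sync()             # catch up with the log before answering
        return self.view.get(key)
```

Every server that replays the same log reaches the same map state, so all the coordination lives in the log rather than in the map code.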

To scale within a single region, we can add sharding (colours). ShardedMap is a trivial change to LogMap, requiring just that the colour parameter be set correctly on calls to the FuzzyLog.
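
A sketch of what "setting the colour parameter correctly" might look like, assuming keys are mapped to colours by a stable hash. The hash choice (`zlib.crc32`) and all names here are my assumptions for illustration; the paper does not prescribe them.

```python
import zlib


def colour_for(key, num_colours):
    # Stable hash so every client maps a key to the same colour.
    return zlib.crc32(key.encode("utf-8")) % num_colours


class ToyColouredLog:
    def __init__(self, num_colours):
        self.chains = [[] for _ in range(num_colours)]

    def append(self, colour, entry):
        self.chains[colour].append(entry)


class ToyShardServer:
    """Owns one colour and replays only that colour's chain."""

    def __init__(self, log, colour):
        self.log, self.colour = log, colour
        self.played = 0
        self.view = {}

    def put(self, key, value):
        # The only change from the unsharded map: derive the colour from the key.
        colour = colour_for(key, len(self.log.chains))
        self.log.append(colour, (key, value))

    def sync(self):
        chain = self.log.chains[self.colour]
        for key, value in chain[self.played:]:
            self.view[key] = value
        self.played = len(chain)
```

Because each server replays only its own colour, updates to different shards never wait on each other.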

If we need atomic operations across shards (with blind multi-put operations that don’t return a value), the AtomicMap (201 lines of code) can do this. It supports serializable (but not linearizable or strictly serializable) appends.
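
The blind multi-put idea can be sketched as one node appended to every colour it touches, with each shard applying only its own keys. This toy keeps everything in one process, so the append is trivially atomic; in a real deployment that atomicity is the log’s job. Names and the hash-based colour mapping are assumptions of mine.

```python
import zlib


class ToyAtomicMap:
    def __init__(self, num_colours):
        self.num_colours = num_colours
        self.chains = [[] for _ in range(num_colours)]

    def colour_for(self, key):
        return zlib.crc32(key.encode("utf-8")) % self.num_colours

    def multi_put(self, updates):
        # One node carries the whole write set and lands on every
        # colour that owns at least one of its keys.
        node = dict(updates)
        colours = {self.colour_for(k) for k in node}
        for c in colours:
            self.chains[c].append(node)
        return colours

    def play(self, colour):
        # A shard replays its chain, applying only the keys it owns.
        view = {}
        for node in self.chains[colour]:
            for key, value in node.items():
                if self.colour_for(key) == colour:
                    view[key] = value
        return view
```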

For full read/write transactions, TxMap at 417 lines of code can do the business. It tracks read-sets and buffers write-sets, and plays out a commit protocol through nodes in the log. TxMap provides strict serializability.

If we want to go across regions, then CRDTMap (284 lines of code) provides causal+ consistency based on the Observed-Remove set CRDT. We can make it transactional (TxCRDTMap) by changing 80 LOC.
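
For readers who haven’t met it, here is a generic textbook-style Observed-Remove set in Python. It is a state-based sketch of the CRDT itself, not CRDTMap’s code, and the use of random UUID tags is my choice for illustration.

```python
import uuid


class ORSet:
    def __init__(self):
        self.adds = set()      # (element, unique tag) pairs
        self.removed = set()   # tombstones: pairs that have been removed

    def add(self, element):
        # Every add gets a fresh tag, so a later re-add is distinguishable.
        self.adds.add((element, uuid.uuid4().hex))

    def remove(self, element):
        # Remove only the tags this replica has observed so far.
        self.removed |= {(e, t) for (e, t) in self.adds if e == element}

    def contains(self, element):
        return any(e == element for (e, _) in self.adds - self.removed)

    def merge(self, other):
        self.adds |= other.adds
        self.removed |= other.removed
```

If one replica removes an element while another concurrently re-adds it, the re-add carries a tag the remover never saw, so the element survives the merge and both replicas converge.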

CRDTMap sacrifices consistency even when there is no partition in the system. CAPMap (424 LOC) provides strong consistency in the absence of network partitions, and causal consistency during them.

A ZooKeeper clone supporting linear scaling across shards and atomic cross-shard renames is 1881 LOC.

Introducing Dapple, a FuzzyLog implementation

Dapple is a distributed implementation of the FuzzyLog abstraction. It uses a collection of storage servers called chainservers (they store the per-colour chains, and also happen to use chain replication). Each chainserver stores multiple in-memory log-structured address spaces. The FuzzyLog state is partitioned across chainservers with each colour on a single partition. Persistence is achieved using the ‘lots of little batteries’ strategy (battery-backed DRAM).

If we stick to a single colour for a moment, then each region will have a single totally ordered chain. In addition to the latest copy of its own local chain, each region also has potentially stale copies of the other regions’ chains (shadow logs). Clients write to the local log, and shadow logs are asynchronously replicated from other regions. Log appends use chain replication and are acknowledged by the tail. Snapshot requests are sent directly to the tail, and reads can be satisfied by any replica in the chain.
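
The append and snapshot paths can be sketched with a toy chain in Python. This is generic chain replication in a single process with no failures, and `Replica`, `build_chain`, `snapshot` and `read` are hypothetical names rather than Dapple’s interfaces.

```python
class Replica:
    def __init__(self, name):
        self.name = name
        self.log = []
        self.next = None   # successor in the chain; None for the tail

    def append(self, entry):
        self.log.append(entry)
        if self.next is None:
            # Only the tail acknowledges: by now every replica has the entry.
            return (self.name, len(self.log) - 1)
        return self.next.append(entry)


def build_chain(names):
    replicas = [Replica(n) for n in names]
    for current, successor in zip(replicas, replicas[1:]):
        current.next = successor
    return replicas


def snapshot(chain):
    # Ask the tail: anything it holds is fully replicated.
    return len(chain[-1].log)


def read(replica, index):
    # Any replica can serve an index at or below a snapshot from the tail.
    return replica.log[index]
```

Because an entry reaches the tail last, a snapshot taken at the tail never names a position that some other replica is missing.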

The FuzzyLog API supports appending a node to multiple colours, which in Dapple equates to atomically appending a node to multiple logs, one log per colour:

…Dapple uses a classical total ordering protocol called Skeen’s algorithm (which is unpublished but described verbatim in other papers…) to consistently order appends… We add three fault-tolerance mechanisms —leases, fencing, and write-ahead logging— to produce a variant of Skeen’s that completes in two phases in a failure-free ‘fast’ path, but can safely recover if the origin client crashes.

Details of the protocol are given in §5.2 of the paper.
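
For intuition, here is a sketch of the classic two-phase timestamp scheme usually attributed to Skeen, in Python. It deliberately omits the leases, fencing and write-ahead logging that Dapple adds, runs in one process, and uses names of my own (`ToyLog`, `propose`, `finalise`, `multi_append`). Ties on timestamp are broken by message id, which is also my assumption.

```python
class ToyLog:
    def __init__(self, name):
        self.name = name
        self.clock = 0
        self.pending = {}     # msg_id -> [timestamp, is_final]
        self.delivered = []   # msg_ids in the order they were delivered

    def propose(self, msg_id):
        # Phase 1: buffer the message and propose a local timestamp.
        self.clock += 1
        self.pending[msg_id] = [self.clock, False]
        return self.clock

    def finalise(self, msg_id, final_ts):
        # Phase 2: adopt the agreed timestamp, then deliver what is safe.
        self.clock = max(self.clock, final_ts)
        self.pending[msg_id] = [final_ts, True]
        self._deliver_ready()

    def _deliver_ready(self):
        while self.pending:
            msg_id, (ts, is_final) = min(
                self.pending.items(), key=lambda kv: (kv[1][0], kv[0])
            )
            if not is_final:
                break  # an earlier message may still be ordered first
            self.delivered.append(msg_id)
            del self.pending[msg_id]


def multi_append(msg_id, logs):
    # The final timestamp is the maximum of all proposals.
    final_ts = max(log.propose(msg_id) for log in logs)
    for log in logs:
        log.finalise(msg_id, final_ts)
    return final_ts
```

A log holds back a finalised message while any pending message with a smaller timestamp is still unfinalised, which is what makes every log deliver overlapping multi-appends in the same relative order.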

Evaluation

Tango and vCorfu are based on shared logs using a centralised sequencer. Compared to Tango, Dapple offers near linear scaling with 0% multi-colour appends, and holds its advantage over Tango until about 10%. At 100% multi-colour appends Tango wins because we’re back in the total order situation which Tango provides more efficiently.

When using multi-shard operations with AtomicMap, scalability and absolute throughput degrade gracefully as the percentage of multi-colour appends is steadily increased:

A ZooKeeper clone, DappleZK, is implemented in 1881 lines of Rust code. It partitions a namespace across a set of servers, each acting as a Dapple client, storing a partition of the namespace in in-memory data-structures backed by a FuzzyLog colour. Each DappleZK server is responsible for an independent shard of the ZooKeeper namespace. Rename operations move files from one DappleZK shard to another in a distributed transaction. A ZooKeeper deployment maintaining its state in DRAM is included for comparison.

We include the ZooKeeper comparison for completeness; we expect the FuzzyLog single-partition case to outperform ZooKeeper largely due to the different languages used (Rust vs Java) and the difference between prototype and production-quality code.

(Here the assumption is that production quality code is slower due to all the checks-and-balances – versus being faster because it has been optimised).

The last word

The FuzzyLog abstraction— and its implementation in Dapple— extends the shared log approach to partial orders, allowing applications to scale linearly without sacrificing transactional guarantees, and switch seamlessly between these guarantees when the network partitions and heals. Crucially, applications can achieve these capabilities in hundreds of lines of code via simple, data-centric operations on the FuzzyLog, retaining the core simplicity of the shared log approach.
